Getting Open Data down from the cloud to create real impact on the ground

To transform our food systems and make the way we grow, sell and consume food more sustainable, we need data. We need data that is open and online.

Photo by Neil Howard

That means not only ensuring that new data is stored and updated in publicly accessible databases, but also that older datasets are retrieved from offline hard drives and uploaded.

But once open data makes it to the cloud, what’s next?

How do we ensure open data actually makes an impact on the ground to benefit those who need it most?

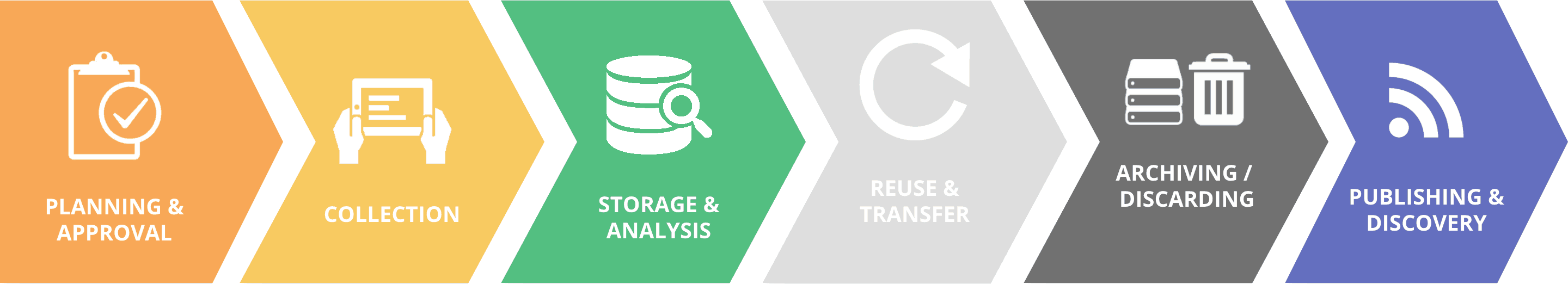

One of the key efforts of the CGIAR Platform for Big Data in Agriculture is to systematically aggregate CGIAR-wide data from those hard drives into online data repositories such as AgTrials, Dataverse, and GBIF, to name a few.

However, we are now starting to realize that, in order for data to be accessed and used by the right people, more needs to be done than just unlocking it.

There is plenty of good intent and enough financial resources to enable the much-needed transformation of food systems, but progress has been slow.

It’s clear that intent and money alone are not enough.

For open data to be effective, it must also be usable.

Decision makers and policymakers are key to the transformation of our food systems, and all the data that is being freed up needs to be presented in a way that lets them engage with it easily.

In January this year, CGIAR and Microsoft co-organized a training session that broached this issue. Speaking of the importance of data literacy and effective communication, Thomas Roca, a Microsoft Data Strategist and guest speaker at the event, said:

“It is sound data infrastructure design and visualization that put insights at the fingertips of policymakers and ultimately makes a data revolution tangible.”

As scientists, we tend to be extremely technical, often over-complicating things. In contrast, the majority of policymakers and other influential industry members are more pragmatic in their approach.

Using modern, intuitive data visualization (Data-Viz) frameworks can help bridge this gap, enabling us to tap into the potential of research outputs from CGIAR.

Interactive visualizations and regular data updates can really help attract and retain the attention of your audience, as long as they avoid the pitfall of information overload.

Modern Data-Viz frameworks also give the power of data exploration to the data user. From dedicated software, such as Power BI or Carto, to JavaScript libraries like Highcharts and Leaflet, data enthusiasts of all backgrounds and skill levels can produce appealing data narratives.

We can only imagine the potential impact easy data exploration could have if it were put at the fingertips of the right industry influencers and policymakers.

For this to happen, we scientists need to upgrade our skills and learn how to communicate in sync with how data flows online – and we can now say, firsthand, just how easy it can be.

In January of this year, the Data Intelligence Hub of CIAT-Asia teamed up with Thomas Roca from Microsoft to hold a workshop introducing these new data visualization concepts and frameworks to both scientific and non-scientific staff from Asia.

A quick survey was done before the course to assess the abilities of the participants. It showed that most of them hated coding, finding it too complicated.

After the workshop, attendees were encouraged to participate in a Datathon, during which they were asked to produce data products. And guess what happened?

With just three days of training, the workshop participants were able to produce intuitive data visualization products from static data sources and APIs, and to tell new data stories in an engaging and accessible way.

Here is one example from one of the participants.

With the advent of cloud computing, more and more data tools are now accessible on cloud infrastructure platforms like Microsoft’s Azure.

It has never been so easy to develop and deploy data applications, automating data collection and seamlessly connecting live data streams to interactive visualizations.

Even more traditional platforms like Excel have now embedded some cloud capabilities, allowing many more aspiring data ninjas to sharpen their skills.

So how do we get Open Data down from the cloud to make the most impact? We just need good vehicles, in the form of great data products and good storytelling tools.

And what is more impactful than a great story?

This article was co-authored with contributions from Burra Dharani Dhar, Data Scientist, Decision and Policy Analysis group; Nguyen Tri Kien, Statistics and Data Management, CIAT-Asia’s Data Intelligence Hub in Hanoi, Vietnam; and Thomas Roca, Microsoft Economist and Data Strategist, CELA, in Brussels, Belgium.

Blog Competition Entry

This article is published as part of our publicly open big data blog competition. If you have enjoyed reading this entry, you can vote by liking, commenting or sharing.

The power of data can be unveiled only when it meets the perfect data storyteller. Data journalists and storytellers can make use of open data to create more appealing, interactive data stories and visualizations, which help bridge the big data divide.

Indeed, just making the data accessible is not enough. As you point out, good visualisation might make it easier to understand the data, but we also need to see if we truly reach all of the audience that might benefit from this data. Often we say our research is for the benefit of smallholder farmers, but for them, it requires more: a middle-person to interpret the data and transform it into practical information that a smallholder farmer (for example) can actually use.